INT8 and FP16 are generally not used in scientific calculations.

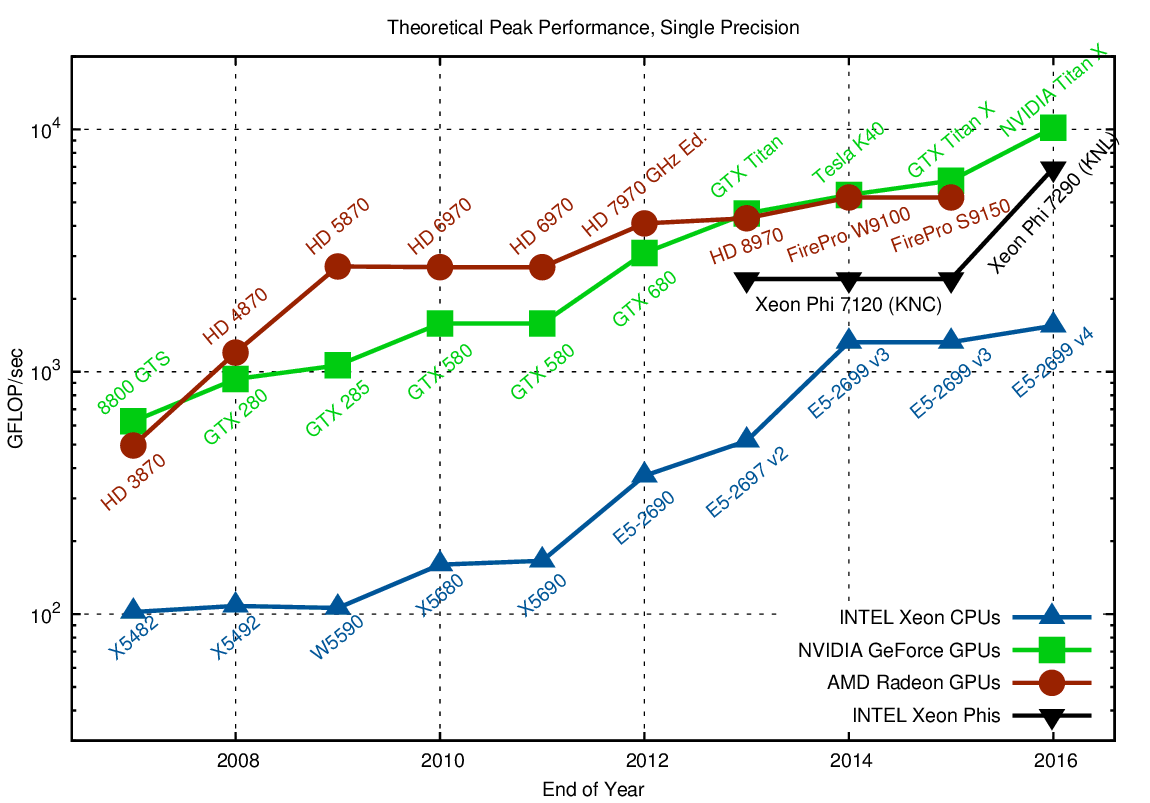

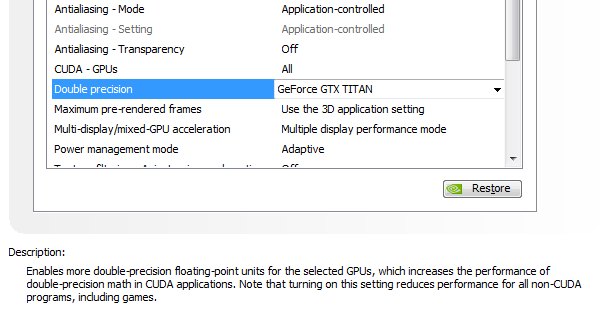

Converting FP32 to FP16 and FP64

Converting FP32 to other formats such as INT32, INT8 or FP16 involves a loss of accuracy. Converting FP32 to FP64 loses nothing, because every FP32 value is exactly representable in FP64.

FP32 in Deep Learning models

FP32 is the most common datatype in Deep Learning and Machine Learning models. The activations, weights and inputs are in FP32. Converting activations and weights to lower precision like INT8 is an optimization technique.

FP32 is the default floating datatype in Programming Languages

Most programming languages support FP32 as a standard float datatype, for example float in C and C++. It is not the default everywhere: a Python float, for instance, is FP64. FP64 is used for high precision calculations, while lower precision types like INT8 are not available in all programming languages. INT8 and other types are supported in languages like C and C++.

FP32 is supported in all x86 CPUs and NVIDIA GPUs

FP32 is the default float size for Deep Learning calculations and has been in use in Deep Learning models since the beginning.

* PyTorch supports FP32 as the default float datatype: torch.float
* TensorFlow supports FP32 as a standard datatype: tf.float32

The NVIDIA Ampere architecture Tensor Cores build upon prior innovations by bringing new precisions, TF32 and FP64, to accelerate and simplify AI adoption and extend the power of Tensor Cores to HPC. With support for bfloat16, INT8, and INT4, these third-generation Tensor Cores are versatile accelerators for both AI training and inference.
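
The accuracy loss when converting FP32 to FP16 or INT8, and the lossless conversion to FP64, can be seen with a small experiment. This is a minimal sketch using only Python's standard struct module. The helper names (round_trip, to_fp32, to_fp16, quantize_int8, dequantize_int8) and the INT8 scale of 1/127 are assumptions made for illustration, not part of any framework's API, and real INT8 quantization schemes choose their scale from the data.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a Python float (FP64) in the given IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def to_fp32(value: float) -> float:
    return round_trip(value, "<f")  # "f" = 32-bit float


def to_fp16(value: float) -> float:
    return round_trip(value, "<e")  # "e" = 16-bit float


def quantize_int8(value: float, scale: float) -> int:
    """Hypothetical symmetric quantization: scale, round, clamp to [-128, 127]."""
    return max(-128, min(127, round(value / scale)))


def dequantize_int8(q: int, scale: float) -> float:
    return q * scale


x32 = to_fp32(0.1)            # nearest FP32 value to 0.1
x16 = to_fp16(x32)            # FP32 -> FP16 drops mantissa bits
x64 = round_trip(x32, "<d")   # FP32 -> FP64 keeps the value unchanged

scale = 1.0 / 127.0           # assumed scale, for illustration only
q8 = quantize_int8(x32, scale)
x8 = dequantize_int8(q8, scale)

print("FP32:", x32)
print("FP16:", x16, "error:", abs(x16 - x32))
print("FP64:", x64, "error:", abs(x64 - x32))
print("INT8:", q8, "->", x8, "error:", abs(x8 - x32))
```

The FP64 error is zero, while the FP16 and INT8 errors are not. This is why lowering precision is treated as an optimization that trades accuracy for speed and memory.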

FP64 PROPS SOFTWARE

Introduction to FP32 (Floating point 32 bits)

FP32 is a floating point data format for Deep Learning where data is represented as a 32-bit floating point number. FP32 is the most widely used data format across all Machine Learning/ Deep Learning applications. FP32 is also known as Single precision floating point format.

* FP32 is a standard floating datatype in Programming Languages
* FP32 is supported in all x86 CPUs and NVIDIA GPUs
* FP32 is supported in all major Deep Learning Inference software

The size of the floating point format impacts the following:

* Less bits means less memory consumption (size of data).
* More bits means more accuracy (results need to be reasonably accurate).
* Less bits means reducing training/ inference time (impacts arithmetic and network bandwidth).

Using reduced precision levels can accelerate data transfer rates, increase application performance, and reduce power consumption, especially on GPUs with Tensor Core support for mixed-precision. The launch of the MI210 also marks the introduction of AMD's improved matrix cores into a PCIe card. For CDNA 2, they've been expanded to allow full-speed FP64 matrix operation.

A floating point number is represented as having two components: a significand X.Y and an exponent E. The floating point number is written as X.YeE, which is the same as X.Y * 10^E. So, a floating point number say 1.92e-4 is the same as 0.000192. This number is stored internally using 32 bits.

There are 32 bits in FP32 which are divided as follows from left to right:

* 1 bit for the sign
* 8 bits for the exponent
* 23 bits for the fraction (mantissa)

In FP32, the 8 exponent bits are used for range and the 23 fraction bits are used for accuracy/ decimal part. In short, this split determines the range and accuracy of floating point numbers.
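
The 32-bit layout of FP32 can be inspected directly. The sketch below uses Python's standard struct module to reinterpret an FP32 value as a 32-bit integer and split it into its sign, exponent and fraction fields. The helper names fp32_fields and fp32_from_fields are made up for this illustration, and the rebuild formula covers only normal numbers (not zero, subnormals, infinity or NaN). The hardware stores the number in base 2, so the exponent field is a power of two with a bias of 127, not the power of ten used in the X.YeE notation.

```python
import struct


def fp32_fields(value: float):
    """Return the (sign, exponent, fraction) bit fields of value stored as FP32."""
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, biased by 127
    fraction = bits & 0x7FFFFF       # 23 bits
    return sign, exponent, fraction


def fp32_from_fields(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild a normal FP32 value: (-1)^sign * 1.fraction * 2^(exponent - 127)."""
    return (-1) ** sign * (1 + fraction / 2**23) * 2.0 ** (exponent - 127)


sign, exponent, fraction = fp32_fields(1.92e-4)
print(f"sign={sign:01b} exponent={exponent:08b} fraction={fraction:023b}")

rebuilt = fp32_from_fields(sign, exponent, fraction)
print(rebuilt)  # the FP32 value closest to 1.92e-4
```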

